An Introduction to Montplex Cache

Montplex Cache is the most cost-efficient caching service in the world, designed with two core objectives: to significantly reduce operational costs and to prioritize a Bring Your Own Cloud (BYOC) strategy. Montplex Cache re-architects traditional Redis by moving persistent storage to EBS block storage and S3 object storage while maintaining protocol compatibility with Redis. This approach delivers up to 10x cost advantages for users and nearly unlimited scalability, well beyond what traditional Redis clusters offer.

Differences from Open Source Redis® / Valkey

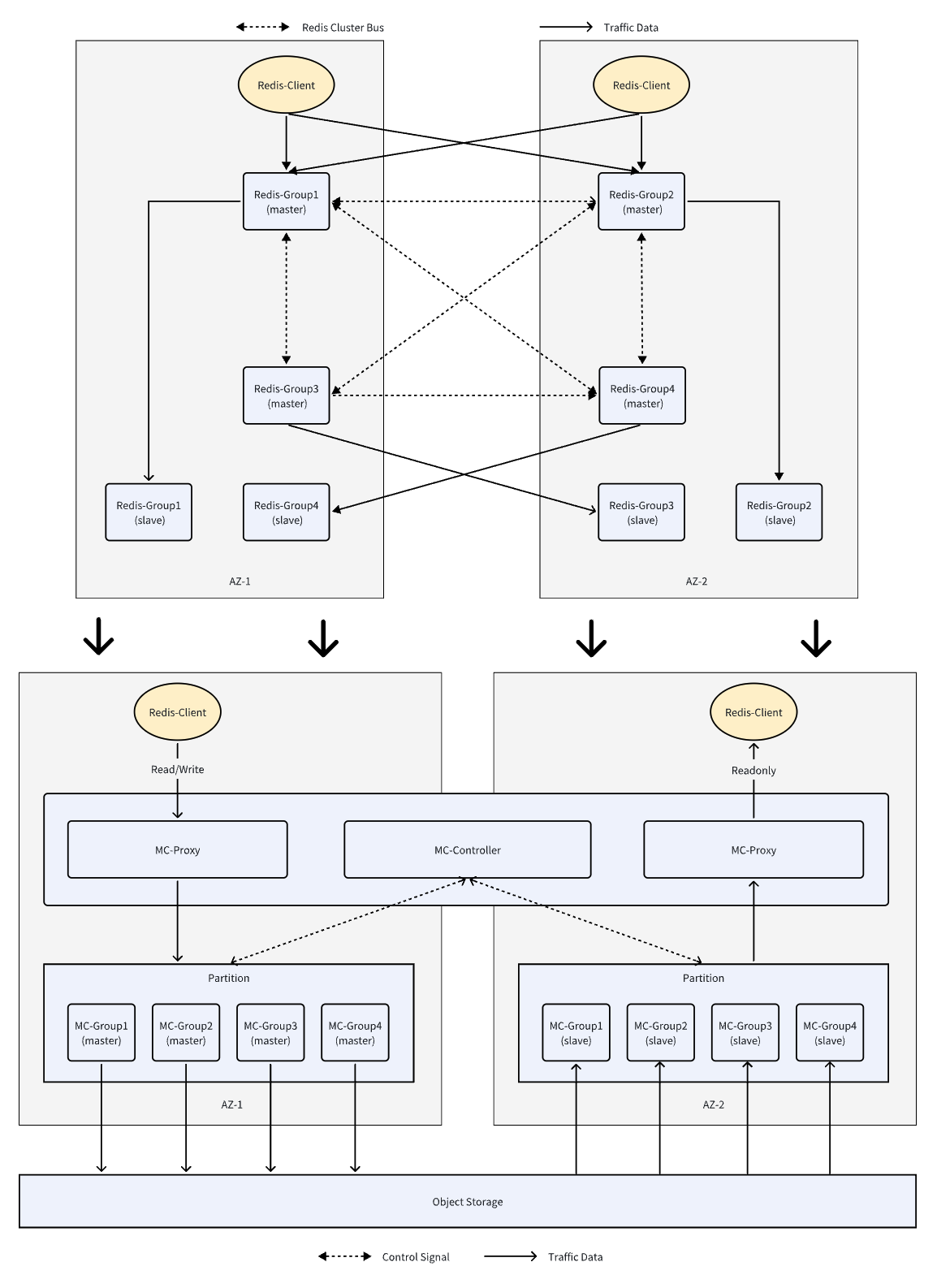

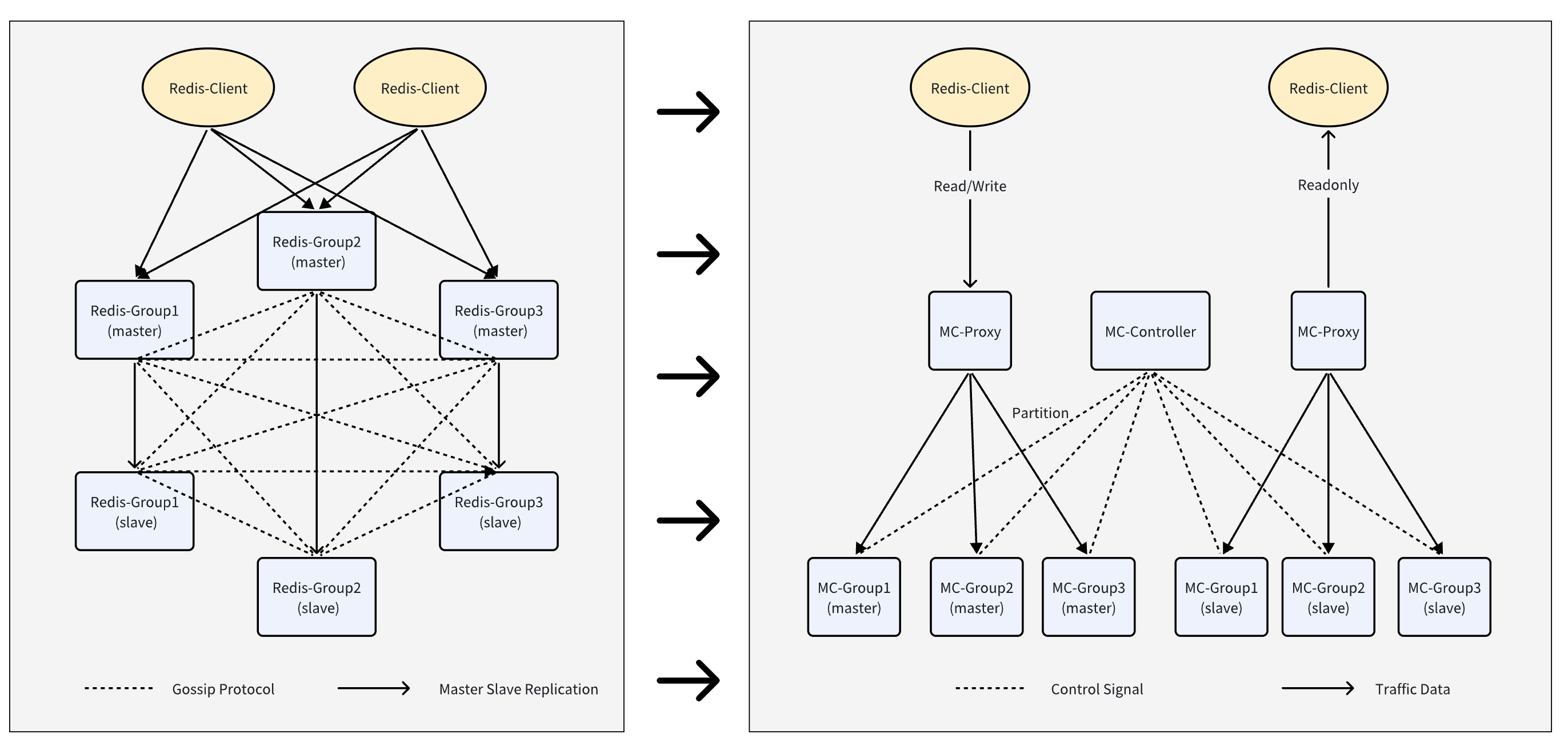

Traditional open source Redis deployments follow a shared-nothing architecture, storing data on local disks and relying on master-slave replication to provide high availability. While this architecture offers a caching abstraction for applications, it faces many limitations in the public cloud. For instance, when nodes are deployed across availability zones, master-slave replication incurs significant cross-AZ data transfer costs. Montplex Cache instead takes a shared-storage approach that decouples the compute and storage layers. It uses cloud storage services, namely EBS block storage and S3 object storage, as the persistent data store, replacing Redis's local log storage. By separating storage from compute, Montplex Cache gains the scalability, availability, and cost-efficiency of cloud storage services, which traditional Redis clusters constrained by local disks cannot match.
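To make the cross-AZ replication cost concrete, here is a minimal back-of-the-envelope sketch. The write rate, replica count, and per-GB price are illustrative assumptions, not figures from Montplex or from any cloud provider's price list; substitute your own numbers.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # a 30-day month, for simplicity


def monthly_cross_az_replication_cost(
    write_mb_per_s: float,
    cross_az_replicas: int,
    price_per_gb: float,
) -> float:
    """Estimate the monthly data transfer bill for replicating writes across AZs.

    Every byte written to the primary is shipped once to each replica that
    lives in a different availability zone.
    """
    gb_written_per_month = write_mb_per_s * SECONDS_PER_MONTH / 1000
    return gb_written_per_month * cross_az_replicas * price_per_gb


# Hypothetical workload: 10 MB/s of sustained writes, two replicas in other
# AZs, and an assumed (placeholder) transfer price of $0.02 per GB.
cost = monthly_cross_az_replication_cost(
    write_mb_per_s=10, cross_az_replicas=2, price_per_gb=0.02
)
print(f"Estimated cross-AZ replication cost: ${cost:,.2f} per month")
```

Under these assumed inputs the replication traffic alone costs on the order of a thousand dollars a month, and it grows linearly with write volume and replica count. This is the cost component a shared-storage design aims to avoid.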

Shared-Storage Architecture

Redis clusters can face performance bottlenecks and availability issues because of the BGSAVE operation used for data persistence. Under high load, BGSAVE can overwhelm the primary node, causing follower nodes to time out and repeatedly request resynchronization. In open source Redis, replication to a follower can itself trigger BGSAVE on the primary, so each sync request adds load to an already busy node, prolongs snapshot generation, and leads to further timeouts and repeated sync requests. The result is a vicious cycle that can impact the entire cluster.

To mitigate these challenges, Montplex Cache employs a shared-storage architecture that decouples compute and storage layers. This design frees applications from the limitations of local disks and the disruptions caused by BGSAVE operations.

Moreover, Montplex Cache enables capabilities like seamless data backup/restore and read-replica provisioning without the blocking operations involved in Redis's master-slave replication or snapshot backups. Leveraging object storage's multi-AZ and multi-region deployments, Montplex Cache can efficiently synchronize data across availability zones and cloud providers, facilitating cross-AZ failover clustering and cross-cloud disaster recovery for global, uninterrupted service. The shared-storage design thus provides scalability, availability, and cost-effectiveness for Redis workloads in the cloud era.

Cost-Oriented Optimization

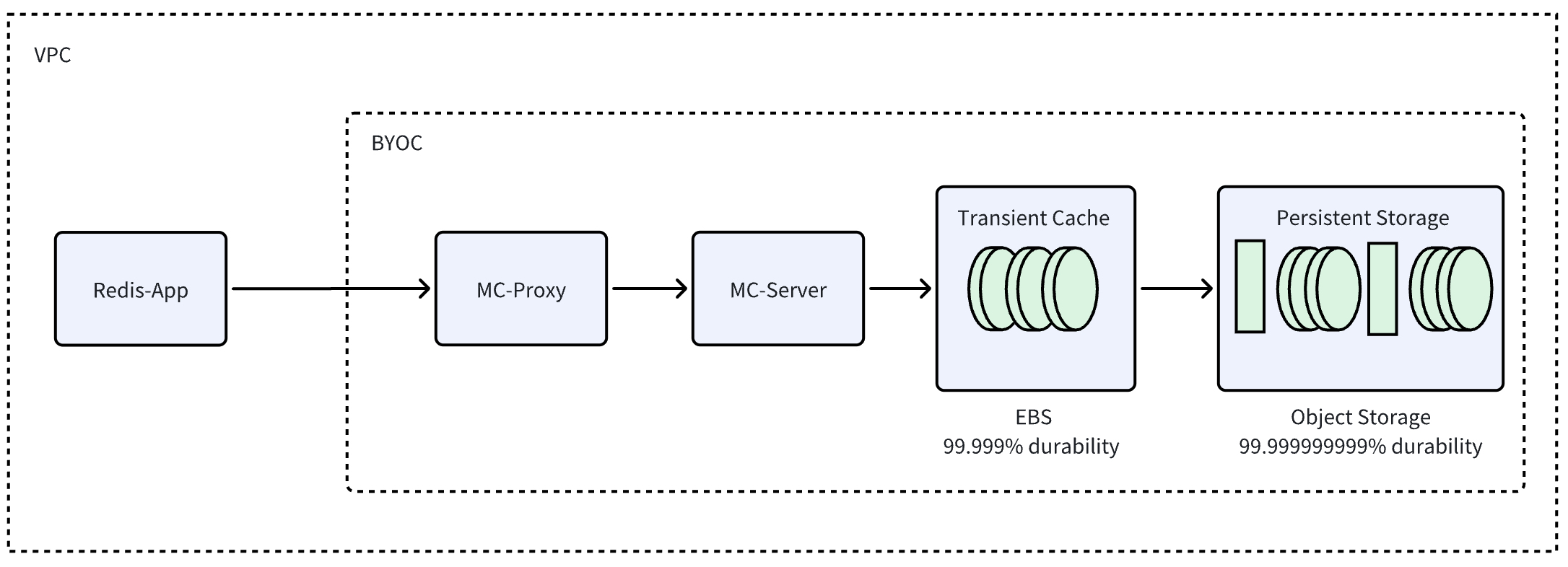

By separating compute and storage, Montplex Cache eliminates the need for cross-node data replication during write operations, substantially reducing inter-node traffic and resource contention. Each node uses a fixed EBS (Elastic Block Store) footprint dedicated to storing recent AOF (Append-Only File) logs. Periodically, complete data node images are generated and stored in S3 object storage. Because the EBS footprint stays fixed, the bulk of the data lives in lower-cost S3 storage, resulting in significant cost savings.

Moreover, if business requirements do not call for read-replica nodes, Montplex Cache can eliminate them entirely, further reducing costs. In the event of a primary node failure, MC-Controller can provision a new data node on a healthy node in less than a minute. That node could be located in a different Availability Zone, Region, or even a different cloud provider (in development).

Furthermore, the Montplex team is actively investing in memory compression research and development. Before data enters S3 storage, the Montplex Cache Compressor, the compression module of the Montplex Cache Engine, analyzes the cache workload and dynamically trains a compression dictionary tailored for the zstd algorithm. Depending on the workload characteristics, this approach can achieve memory compression ratios ranging from 10% to 50%, albeit with a 10% performance trade-off.

From its inception, the Montplex team recognized the potential of the Arm architecture. As a result, half of the company's servers use Arm-based processors. All Montplex software is optimized for Arm, enabling the platform to deliver 90-95% of the performance of Intel-based systems with equivalent configurations. Additionally, all software undergoes comprehensive testing on both x86 and Arm to ensure that stability and performance meet expectations on both platforms. Considering that most public cloud providers offer Arm instances at a 20-30% lower price point than Intel/AMD alternatives, these optimizations can result in significant cost savings for users.

By eliminating cross-node replication overhead, leveraging cost-effective object storage, implementing memory compression techniques, and optimizing for Arm, Montplex Cache delivers substantial cost advantages over traditional Redis deployments, making it an attractive choice for cost-conscious organizations seeking high-performance caching in the cloud.
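The Arm figures quoted above (90-95% of Intel performance at a 20-30% lower price) can be combined into a single cost-per-unit-of-performance number. The sketch below is plain arithmetic on those two ranges; the function name is made up for illustration, and the result says nothing about any specific instance type.

```python
def relative_cost_per_performance(
    price_discount: float, relative_performance: float
) -> float:
    """Cost per unit of performance on Arm, relative to x86 (x86 == 1.0).

    price_discount: fraction by which the Arm instance is cheaper (0.20 = 20%).
    relative_performance: Arm throughput as a fraction of x86 (0.90 = 90%).
    """
    if not 0 <= price_discount < 1:
        raise ValueError("price_discount must be in [0, 1)")
    if relative_performance <= 0:
        raise ValueError("relative_performance must be positive")
    return (1.0 - price_discount) / relative_performance


# Least favorable corner of the quoted ranges: 20% cheaper, 90% performance.
worst_case = relative_cost_per_performance(0.20, 0.90)
# Most favorable corner: 30% cheaper, 95% performance.
best_case = relative_cost_per_performance(0.30, 0.95)

print(f"Savings per unit of performance: "
      f"{(1 - worst_case):.0%} to {(1 - best_case):.0%}")
```

With the ranges stated in the text, the saving per unit of performance works out to roughly 11% in the least favorable case and roughly 26% in the most favorable one.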

BYOC

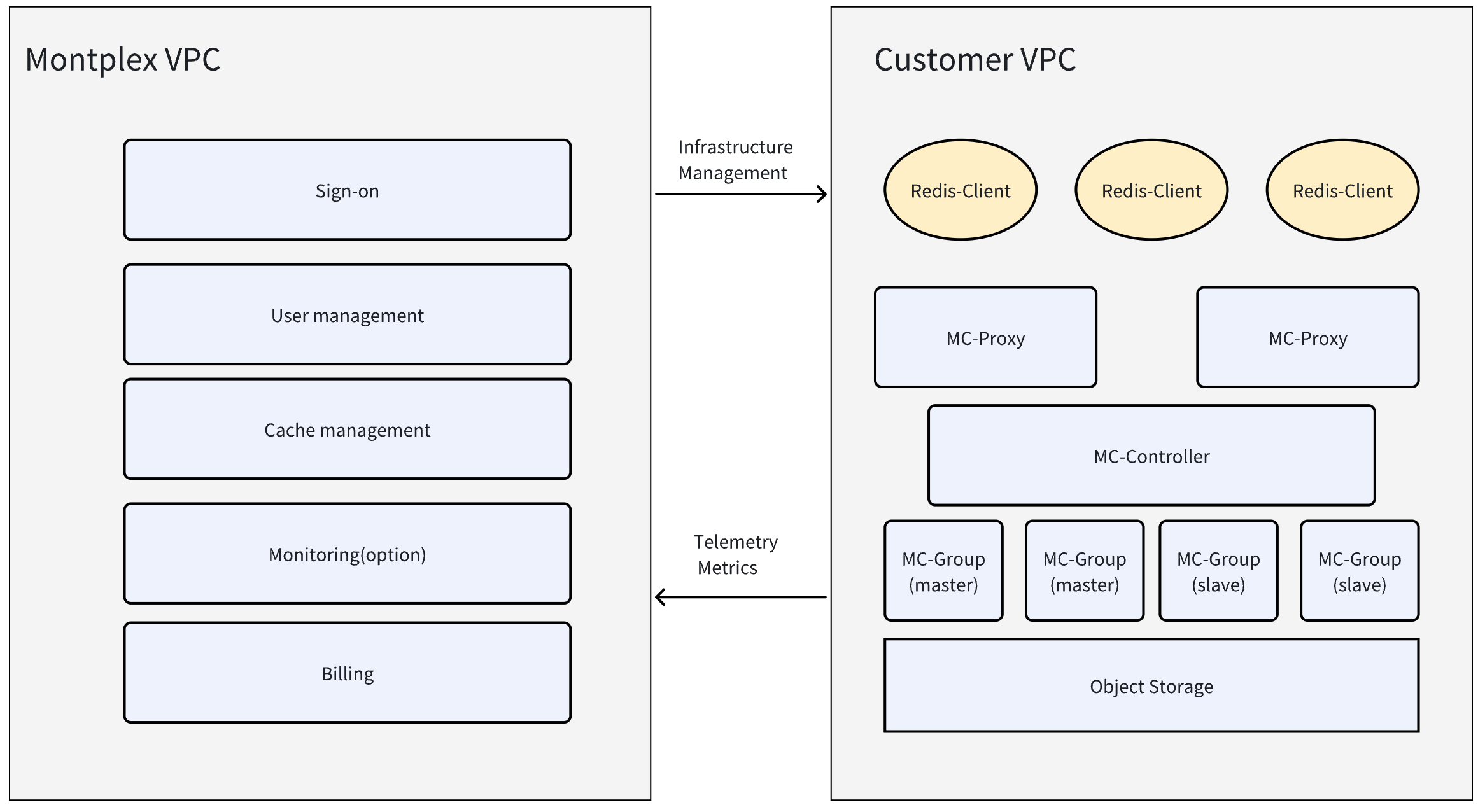

The Bring Your Own Cloud (BYOC) model has emerged as a compelling solution, empowering organizations to harness the advantages of fully managed services while retaining data sovereignty, optimizing costs through existing cloud discounts, enhancing security with dedicated private environments, and enabling low-latency access, all while allowing them to focus on core business objectives. BYOC offers several advantages over fully managed cloud services. For instance, with fully managed services, setting up VPC Peering (Virtual Private Cloud Peering) can introduce security concerns and challenges in planning CIDR (Classless Inter-Domain Routing) address spaces. To mitigate these issues, organizations may need to use services like AWS PrivateLink, which can incur substantial additional costs.

Montplex Cache's BYOC deployment mode allows organizations to run the service on their own cloud infrastructure instead of relying on a vendor-hosted managed cache service. The solution is designed to integrate tightly with AWS, GCP, and Azure for optimized performance and security.

Montplex Cache currently supports AWS as the cloud platform. GCP and Azure integrations are in development and will be available soon.

During the initialization of the hardware environment, Montplex Cache creates a dedicated IAM role with permission boundaries that allows it to manage resources in the organization's account, such as virtual machines, disks, and VPCs. With the permissions granted to this role, Montplex Cache can provide auto-scaling based on load, as well as high availability across availability zones and regions.

Users can restrict and adjust the permissions of this IAM role through the Montplex console, ensuring that the role is used only within their VPC and that its privileges remain secure. This approach guarantees that organizations retain control over their cloud infrastructure while benefiting from Montplex Cache's managed services.

The BYOC model ensures data sovereignty, compliance, and enhanced security by keeping organizations' data within their private cloud environment while offering low-latency access. It allows organizations to leverage existing cloud discounts, avoid data transfer costs, and reduce infrastructure expenses. Additionally, it provides a fully managed experience, enabling organizations to focus on their core business while Montplex Cache handles deployment, upgrades, monitoring, and maintenance of the cache clusters.

Unlimited Scale

Redis Cluster's native sharding is limited in practice to around 1,000 nodes (the Redis Cluster specification targets scalability up to 1,000 nodes) because of the overhead of the gossip protocol used for inter-node state synchronization. As the number of nodes increases, the communication overhead of the gossip protocol grows significantly, constraining the system's maximum shard count. Montplex Cache circumvents this limitation by introducing a centralized controller architecture, inspired by the placement driver in Google's Spanner, that coordinates shard management across the cluster. This component, known as MC-Controller, is responsible for distributing data efficiently, ensuring high availability, and maintaining data consistency across all nodes. The design draws on the experience of Montplex Cache's founding team, whose members come from the early TiDB kernel team at PingCAP, where they gained extensive expertise in building and deploying large-scale distributed databases.

By decoupling shard coordination from the data nodes, MC-Controller ensures that data operations remain highly performant and unaffected by metadata management overhead, even at massive scales. Montplex Cache eliminates the overhead of cross-AZ synchronization in Redis master-slave replication. Furthermore, MC-Controller is designed to support cross-AZ deployments (in development), with the entire cluster's metadata stored in the controller and the data residing on EBS and S3 storage. This architecture significantly reduces costs while maintaining disaster recovery capabilities on par with direct synchronization approaches.
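The idea of a centralized shard map can be sketched in a few lines. This is a toy illustration only: `ShardController` and its methods are hypothetical names, not the MC-Controller API, and the round-robin placement is an assumption chosen for brevity. The point it shows is that data nodes never exchange membership state with each other; only the controller holds the shard-to-node mapping, and a node failure moves only the affected shards.

```python
from dataclasses import dataclass, field


@dataclass
class ShardController:
    """Hypothetical centralized shard map (not the real MC-Controller)."""

    num_shards: int
    nodes: list = field(default_factory=list)       # names of live data nodes
    assignment: dict = field(default_factory=dict)  # shard id -> node name

    def rebalance(self) -> None:
        """Assign every shard to a live node in round-robin order."""
        if not self.nodes:
            raise RuntimeError("no live nodes to place shards on")
        for shard in range(self.num_shards):
            self.assignment[shard] = self.nodes[shard % len(self.nodes)]

    def handle_node_failure(self, failed: str) -> list:
        """Reassign only the failed node's shards; return the moved shard ids."""
        self.nodes.remove(failed)
        if not self.nodes:
            raise RuntimeError("no surviving nodes")
        moved = [s for s, n in self.assignment.items() if n == failed]
        for i, shard in enumerate(moved):
            self.assignment[shard] = self.nodes[i % len(self.nodes)]
        return moved

    def locate(self, shard: int) -> str:
        """Answer a routing query from the controller's metadata alone."""
        return self.assignment[shard]


controller = ShardController(num_shards=8, nodes=["node-a", "node-b", "node-c"])
controller.rebalance()
moved = controller.handle_node_failure("node-b")
print("shards moved after failure:", moved)
print("shard 4 now lives on:", controller.locate(4))
```

In a gossip-based cluster every node would have to learn about the failure from its peers; here a single metadata update in the controller is enough, which is why coordination cost does not grow with the number of data nodes in this simplified model.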

Compatible with Redis® / Valkey

The essence of Redis compatibility lies in adhering to the RESP protocol, and Montplex Cache prioritizes compatibility with both the Redis and Valkey communities. This compatibility is a cornerstone of our product development strategy. By integrating the native compute layers of open source Redis and Valkey into its cloud-native caching solution and identifying a minimal set of storage integration points, Montplex Cache achieves protocol compatibility for data manipulation commands. In cluster mode, Montplex Cache currently uses a proxy-based solution which, apart from some differences in management commands, behaves consistently with redis-cluster mode for data manipulation commands, significantly reducing migration cost. This approach enables Montplex Cache to achieve protocol alignment within one month of new Redis or Valkey releases, keeping pace with the latest updates from both communities.
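To show what RESP compatibility means at the wire level, the sketch below encodes a command the way a Redis client sends it: as a RESP array of bulk strings. The helper name `encode_resp_command` is made up for this example. Any server that parses these bytes and replies in RESP can be used by existing Redis clients unchanged.

```python
from typing import Union


def encode_resp_command(*args: Union[str, bytes]) -> bytes:
    """Encode a command as a RESP array of bulk strings.

    Layout: "*<count>\r\n" followed by "$<length>\r\n<bytes>\r\n" per argument.
    """
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg.encode("utf-8") if isinstance(arg, str) else arg
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


wire = encode_resp_command("SET", "greeting", "hello")
print(wire)
```

The encoded bytes for `SET greeting hello` are `*3\r\n$3\r\nSET\r\n$8\r\ngreeting\r\n$5\r\nhello\r\n`: a three-element array whose elements carry their byte lengths, which makes the format binary-safe.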

Conclusion

Montplex Cache presents a compelling caching solution, re-architecting traditional Redis with a shared-storage approach that unlocks the scalability, availability, and cost advantages of cloud storage. Its Placement Driver-like architecture removes the scalability bottleneck of gossip-based coordination, enabling scale-out without compromising performance. Through cost-oriented optimizations such as cost-effective storage, memory compression, and Arm support, Montplex Cache delivers substantial cost savings over traditional Redis deployments. Moreover, its BYOC support gives organizations data sovereignty, access to existing cloud discounts, enhanced security, and low-latency access. By achieving near-complete Redis®/Valkey protocol compatibility for data manipulation commands, Montplex Cache eases integration and migration while keeping up with the latest community updates. With its architecture, scalability, cost-effectiveness, and compatibility, Montplex Cache is positioned as a future-proof caching solution for the cloud era.